Through KKP you can set up automatic scheduled etcd backups for your user clusters, and easily restore etcd to its previous state.

First, you need to enable and configure the backup destination (backup bucket, endpoint, and credentials). To see how, check Etcd Backup Destination Settings.

Etcd Backups

When etcd backups are configured for a seed, project admins can create automatic etcd backups for the project's clusters. An EtcdBackupConfig for such a backup looks like this:

    apiVersion: kubermatic.k8c.io/v1
    kind: EtcdBackupConfig
    metadata:
      name: daily-backup
      namespace: cluster-zvc78xnnz7
    spec:
      cluster:
        apiVersion: kubermatic.k8c.io/v1
        kind: Cluster
        name: zvc78xnnz7
      schedule: '@every 20m'
      keep: 20
      destination: s3

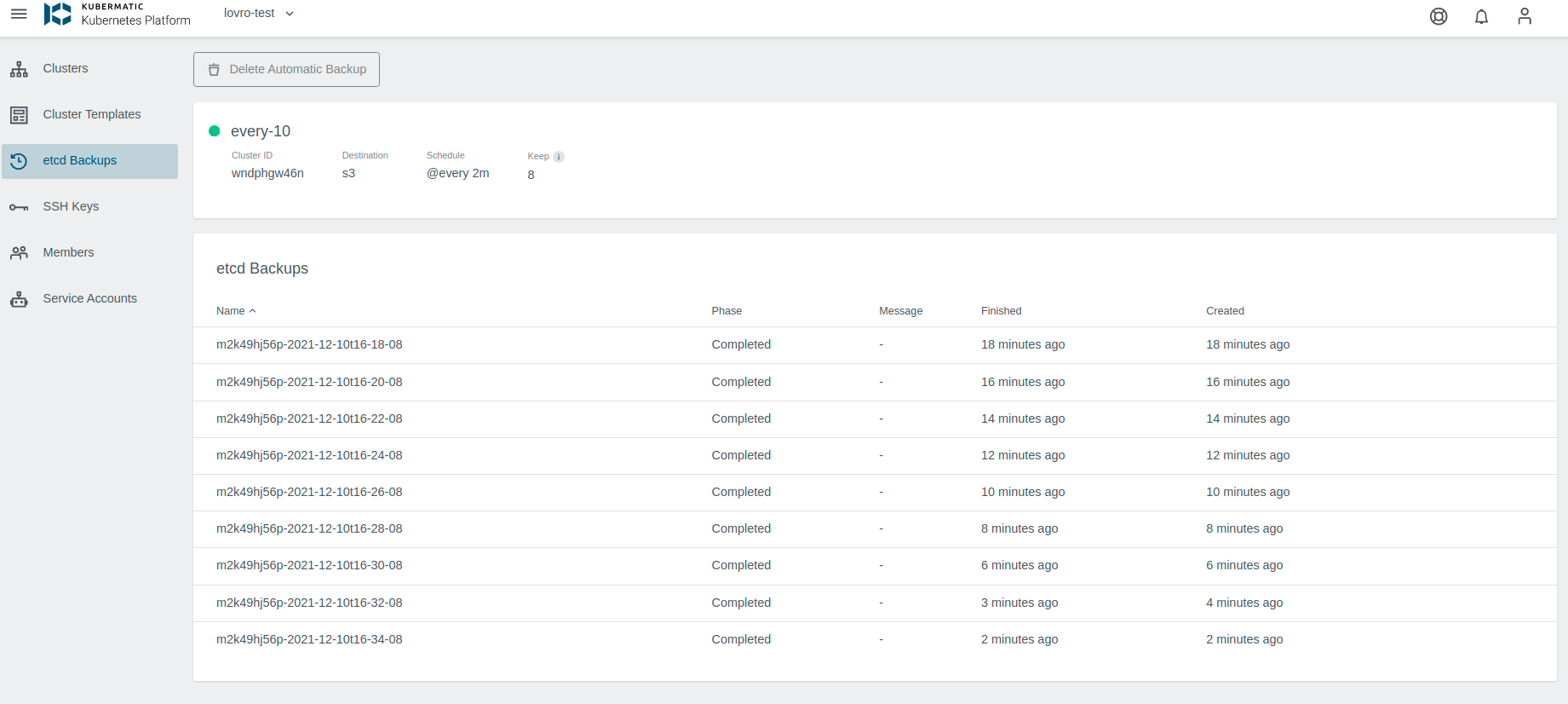

Once created, the controller starts a new backup every time the schedule demands it (every 20 minutes in the example) and deletes backups once more than .spec.keep (20 in the example) have been created. Created backups are named after the configuration name and the backup timestamp, in the form $backupconfigname-$timestamp. Each backup is stored at the specified backup destination, which is configured per seed.

It is also possible to do one-time backups (snapshots).

For more detailed info check Etcd Backup and Restore.

Creating Etcd Backups

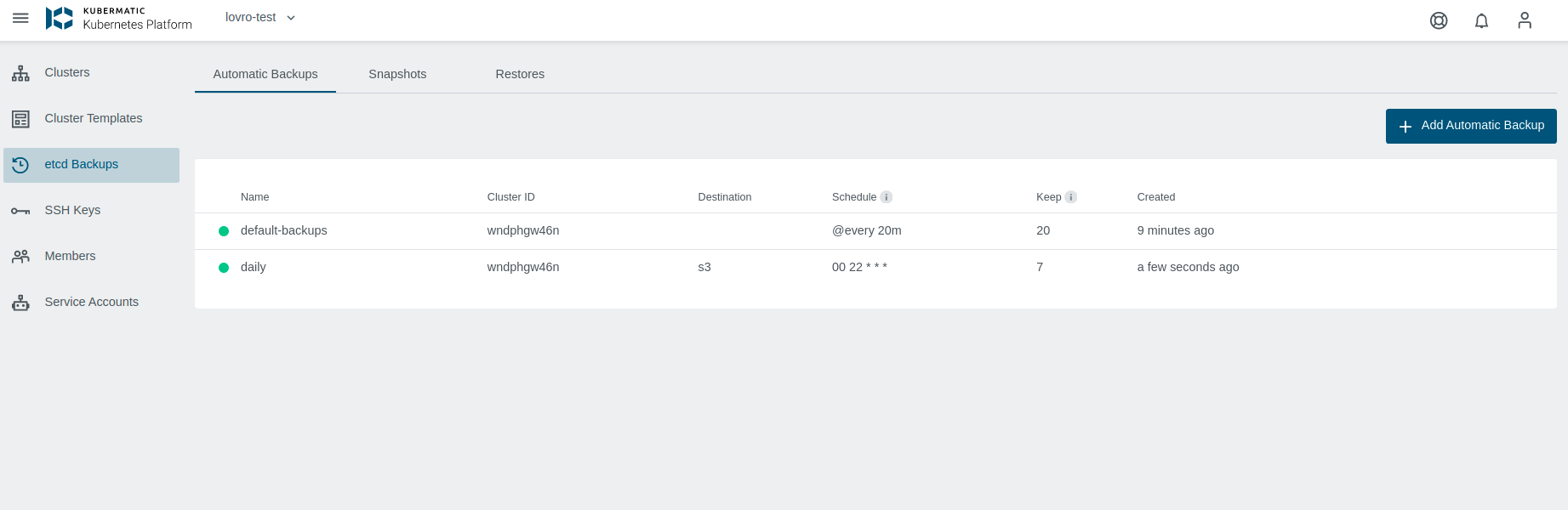

EtcdBackups and Restores are project resources, and you can manage them in the Project view.

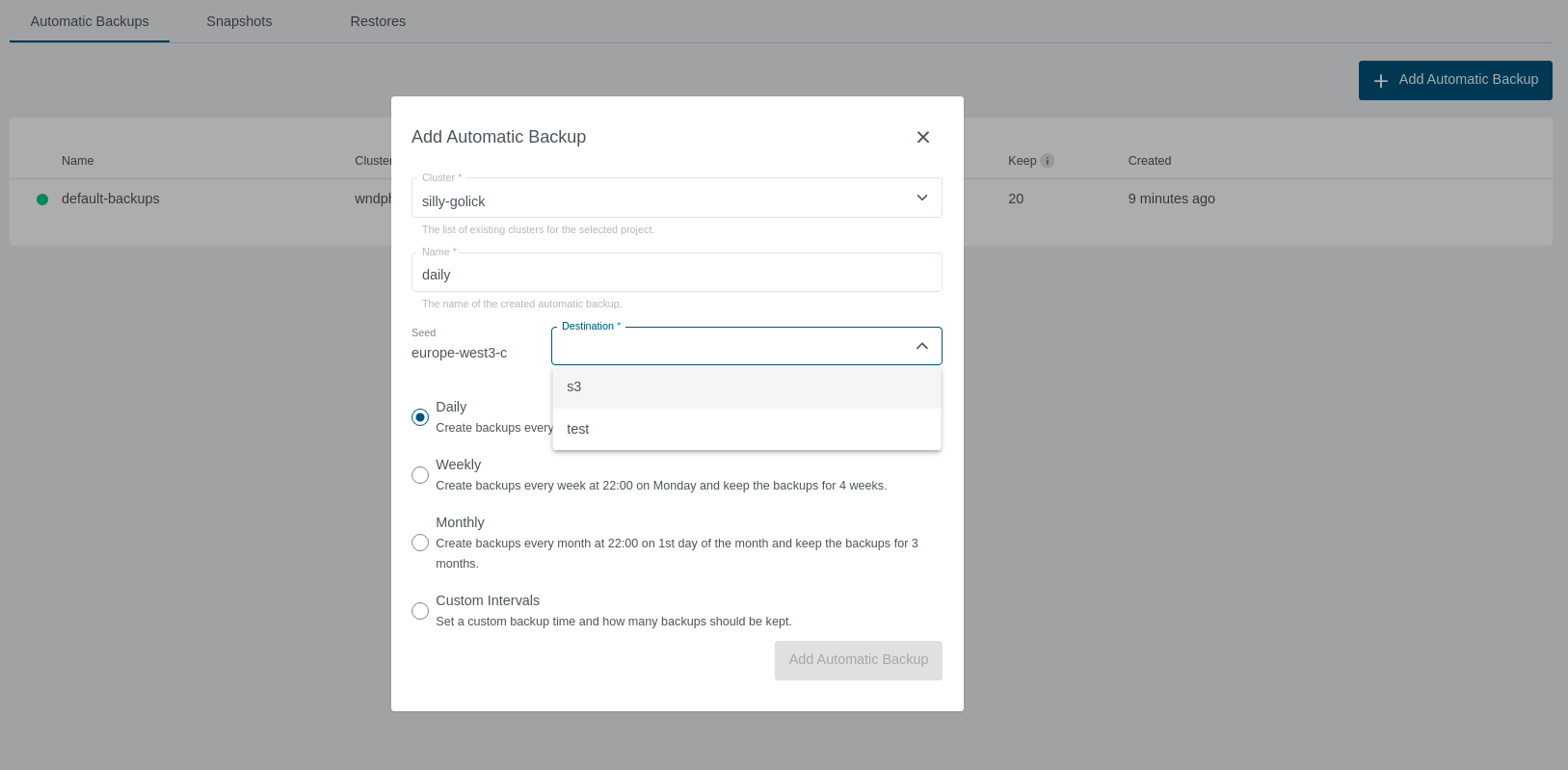

To create a backup, click the Add Automatic Backup button. You can choose a preset daily, weekly, or monthly backup, or create a backup with a custom interval and keep count. The destination dropdown lists the backup destinations configured for the seed that the cluster belongs to.

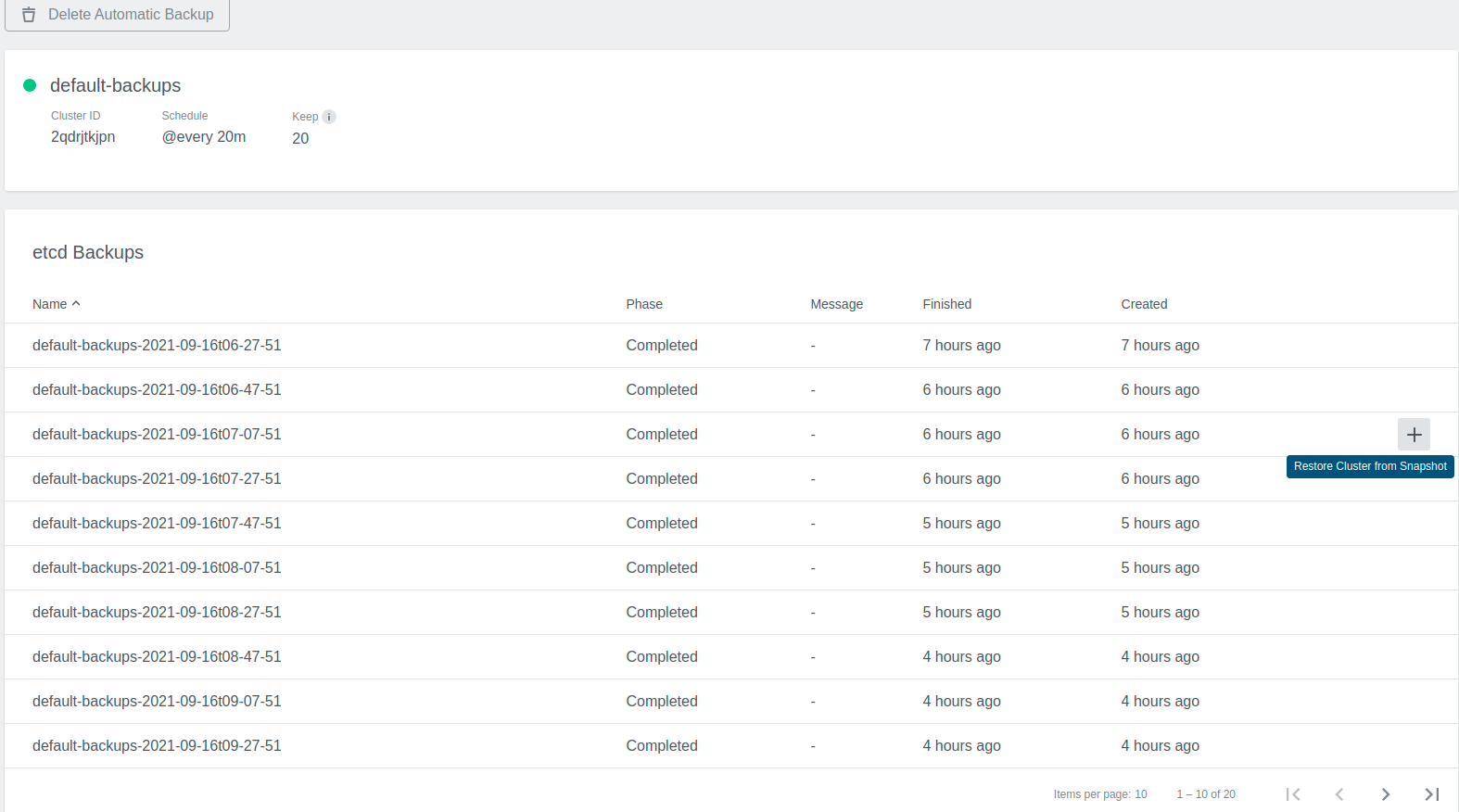

To see what backups are available, click on a backup you are interested in, and you will see a list of completed backups.

If you want to restore from a specific backup, just click on the restore from backup icon.

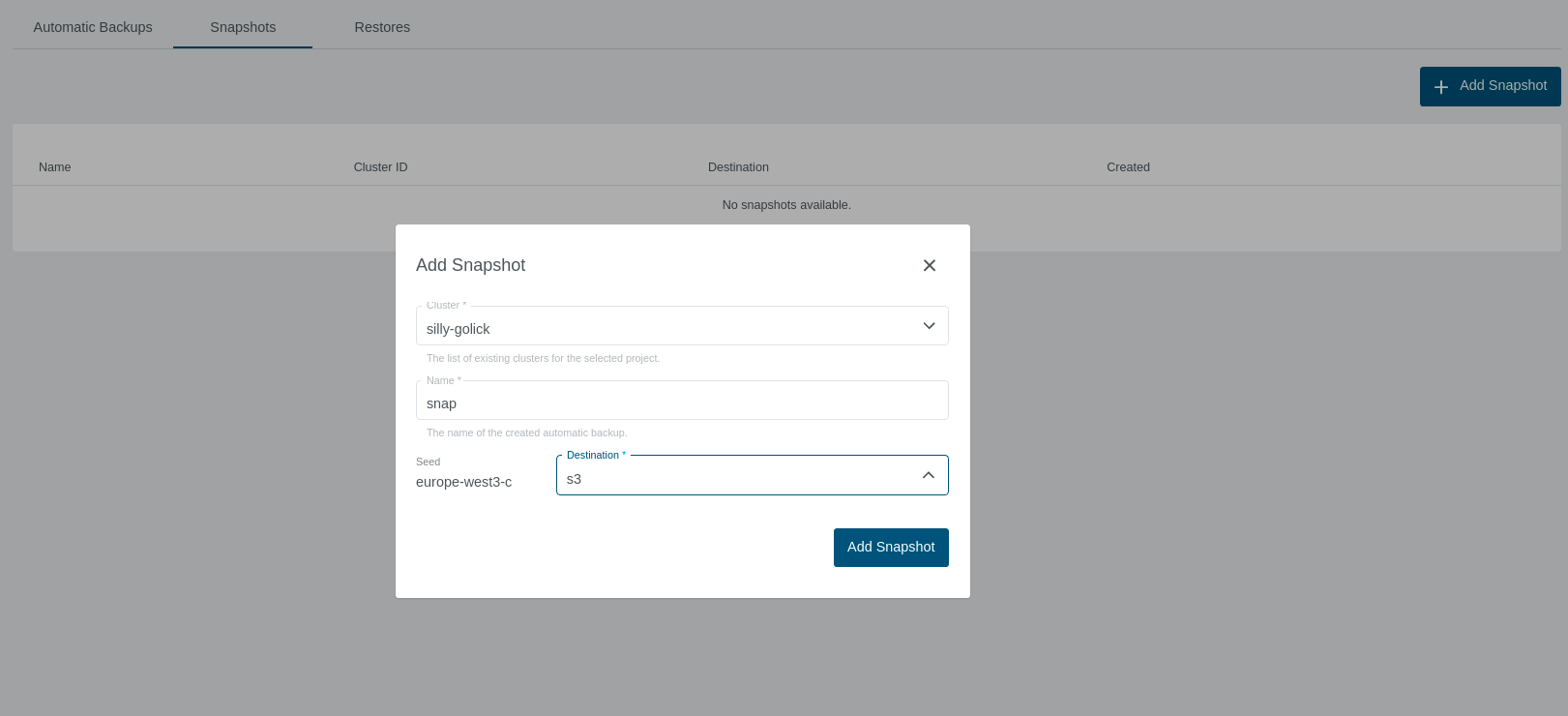

Etcd Backup Snapshots

You can also create one-time backup snapshots. They are set up like the automatic backups, except that they have no schedule or keep count.
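As a rough sketch of what such a snapshot might look like as a resource, assuming it is expressed as an EtcdBackupConfig that simply omits schedule and keep. The name one-time-snapshot is hypothetical, and the cluster and destination values are reused from the automatic backup example:

```yaml
# Sketch only: a one-time snapshot, assumed to be an EtcdBackupConfig
# with no schedule and no keep count.
apiVersion: kubermatic.k8c.io/v1
kind: EtcdBackupConfig
metadata:
  name: one-time-snapshot          # hypothetical name
  namespace: cluster-zvc78xnnz7    # namespace of the user cluster
spec:
  cluster:
    apiVersion: kubermatic.k8c.io/v1
    kind: Cluster
    name: zvc78xnnz7
  # schedule and keep are intentionally left out
  destination: s3
```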

Backup Restores

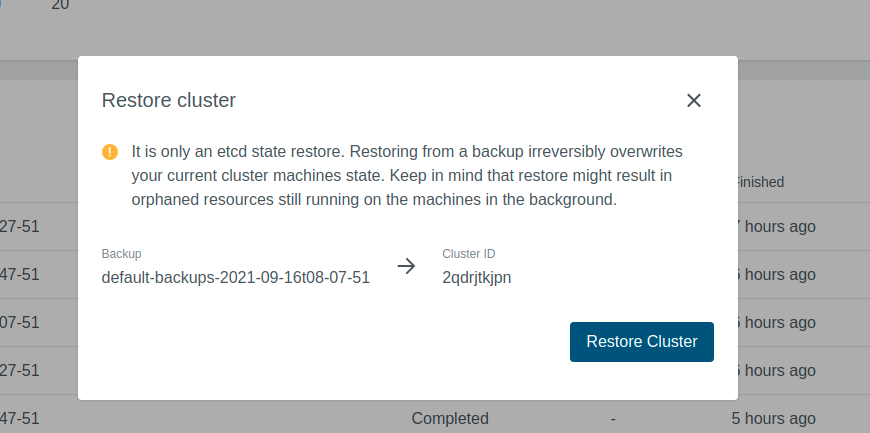

To restore a cluster's etcd from a backup, open the backup details as shown above and select the backup from which you want to restore.

During a restore, the cluster is paused, and etcd is deleted and then recreated from the backup. When the restore is done, the cluster is unpaused.

Keep in mind that this is an etcd backup and restore. The only thing that is restored is the etcd state, not application data or similar.

If pods that are not in the backup are running on the user cluster, they may become orphaned: they will keep running even though etcd (and Kubernetes) is not aware of them.

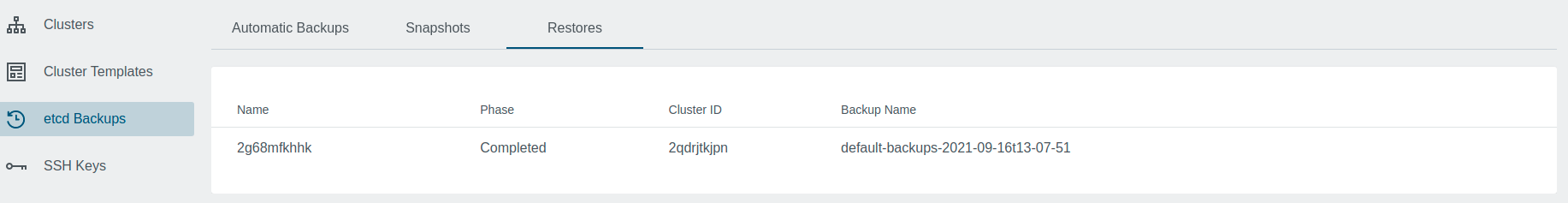

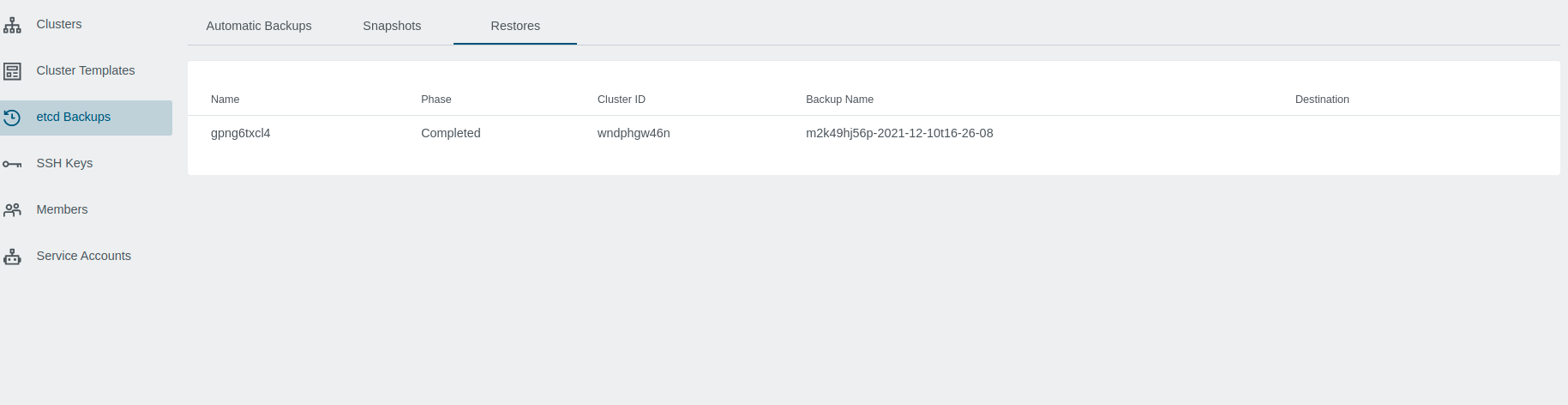

This will create an EtcdRestore object for your cluster. You can observe the progress in the Restore list.

In the cluster view, you may notice that your cluster is in a Restoring state. You cannot interact with it until the restore is done.

When it’s done, the cluster will get un-paused and un-blocked, so you can use it. The Etcd Restore will go into a Completed state.
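For orientation, the EtcdRestore object created by the dashboard might look roughly like the sketch below. The field names, in particular backupName, are assumptions modelled on the EtcdBackupConfig example and should be checked against the CRD reference. The object name is hypothetical, and the backup name is a placeholder in the $backupconfigname-$timestamp form described earlier:

```yaml
# Sketch only: field names are assumptions, not confirmed by this page.
apiVersion: kubermatic.k8c.io/v1
kind: EtcdRestore
metadata:
  name: restore-daily-backup       # hypothetical name
  namespace: cluster-zvc78xnnz7    # namespace of the user cluster
spec:
  cluster:
    apiVersion: kubermatic.k8c.io/v1
    kind: Cluster
    name: zvc78xnnz7
  # Placeholder: replace with the name of a completed backup
  backupName: 'daily-backup-<timestamp>'
  destination: s3
```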